Brief Overview of Docker and Virtual Machines

In the world of computing and software development, Docker and virtual machines (VMs) are two powerful technologies that enable the deployment and management of applications in isolated environments. Both Docker and VMs aim to solve similar problems but achieve their goals through fundamentally different approaches.

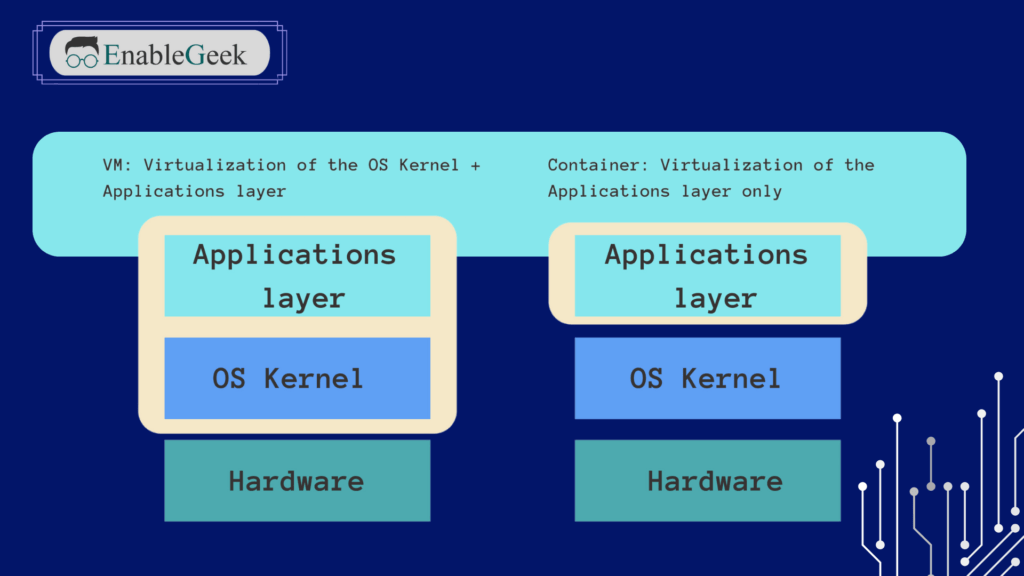

Docker is an open-source platform designed to automate the deployment, scaling, and management of applications within lightweight containers. Containers package the application code along with its dependencies, libraries, and configuration files, ensuring that the application runs consistently across various environments. Docker containers are highly efficient, as they share the host operating system’s kernel, which leads to faster startup times and lower resource consumption compared to traditional VMs.

Virtual Machines, on the other hand, create complete, isolated environments by virtualizing the underlying hardware. Each VM runs its own operating system, which can be different from the host operating system, along with the application and its dependencies. This approach provides a high level of isolation and security, as VMs are completely separate from each other and the host system. However, VMs tend to be heavier, requiring more system resources and longer startup times due to the overhead of running a full-fledged operating system for each VM.

Importance of Understanding the Differences

Understanding the differences between Docker and virtual machines is crucial for several reasons:

- Resource Efficiency: Knowing how each technology utilizes system resources can help in optimizing performance and cost. Docker’s lightweight nature makes it more suitable for environments where quick scalability and minimal overhead are essential.

- Deployment and Management: Different applications and environments have varying requirements. By understanding the strengths and weaknesses of Docker and VMs, you can choose the most appropriate technology for your specific use case, ensuring smoother deployment and management processes.

- Security Considerations: Security is a critical aspect of any application deployment. Both Docker and VMs offer different levels of isolation and security. Understanding these differences helps in implementing the right security measures to protect your applications and data.

- Scalability and Portability: Modern applications often need to scale rapidly and run consistently across multiple environments, such as development, testing, and production. Docker’s portability and rapid deployment capabilities make it a strong candidate for these scenarios, while VMs provide robust, isolated environments that can be beneficial for certain workloads.

- Cost Management: The cost implications of running Docker containers versus virtual machines can be significant. Efficient resource usage and the ability to run multiple containers on a single host can lead to cost savings, especially in large-scale deployments.

What is Docker?

Definition and Purpose

Docker is an open-source platform designed to automate the deployment, scaling, and management of applications using containerization. Containers package an application and its dependencies together, ensuring that it runs consistently across different computing environments. Docker provides a standardized unit of software, which includes everything needed to run the application: code, runtime, system tools, libraries, and settings.

The primary purpose of Docker is to enable developers to create, deploy, and run applications with ease, regardless of the environment in which they are executed. This isolation from the underlying infrastructure makes it easier to develop, test, and deploy applications rapidly and consistently.
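
To make "code, runtime, system tools, libraries, and settings" concrete, here is a minimal sketch that renders the text of a Dockerfile for a small Python service. The function name `render_dockerfile`, the base image tag, and the file names are illustrative assumptions, not part of any Docker API; the Dockerfile instructions themselves (`FROM`, `WORKDIR`, `COPY`, `RUN`, `CMD`) are standard.

```python
def render_dockerfile(base_image, requirements_file, entrypoint):
    """Return Dockerfile text that bundles runtime, dependencies, and code.

    Hypothetical helper for illustration only; Docker itself just reads
    the resulting text file.
    """
    lines = [
        f"FROM {base_image}",                 # runtime and system libraries
        "WORKDIR /app",                       # working directory in the image
        f"COPY {requirements_file} .",        # dependency list
        f"RUN pip install --no-cache-dir -r {requirements_file}",
        "COPY . .",                           # application code
        f'CMD ["python", "{entrypoint}"]',    # default start command
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Assumed example values; adjust to the project at hand.
    print(render_dockerfile("python:3.12-slim", "requirements.txt", "app.py"))
```

Copying the dependency list before the rest of the code is a common ordering, because it lets the dependency layer be reused from cache when only application code changes.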

Key Features and Benefits

- Lightweight Containers: Docker containers are lightweight and share the host operating system’s kernel, making them more efficient than traditional virtual machines, which each require a full operating system instance.

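- Portability: A Docker image runs on any host that provides a compatible container runtime, kernel, and CPU architecture, giving a consistent environment across development, testing, and production. This “write once, run anywhere” capability simplifies application deployment, with one caveat: an image built for one architecture (for example, amd64) does not run natively on another (for example, arm64) unless a multi-architecture image is published.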

- Rapid Deployment and Scalability: Docker enables quick startup times for containers, allowing for rapid deployment and scaling of applications. Containers can be spun up or down in seconds, making it easy to scale applications to meet demand.

- Isolation and Security: Each Docker container runs in its own isolated environment, so applications do not interfere with each other. This isolation also limits the impact of a compromised application. Because containers share the host kernel, however, a kernel vulnerability can still cross container boundaries.

- Version Control and Component Reuse: Docker images can be versioned, and components can be reused across different projects, promoting consistency and reducing redundancy. Developers can pull specific versions of an image, ensuring that the same environment is recreated each time.

- DevOps Integration: Docker integrates well with DevOps practices, enabling continuous integration and continuous deployment (CI/CD). Docker’s compatibility with various CI/CD tools facilitates automated testing and deployment pipelines.
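
Pinning "specific versions of an image" depends on how an image reference is written. The following is a simplified sketch of how a reference such as `nginx:1.25` splits into registry, repository, tag, and digest. The `ImageRef` type and `parse_image_ref` function are hypothetical names, and the parser covers only the common cases (it assumes Docker Hub as the default registry and `latest` as the default tag, and does no validation).

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into its parts (simplified, no validation)."""
    name, _, digest = ref.partition("@")
    first, slash, rest = name.partition("/")
    # The first path component is a registry host if it looks like one.
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
    repository, colon, tag = path.rpartition(":")
    if not colon:
        repository, tag = path, ""
    # Official Docker Hub images live under the "library/" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository
    # With neither tag nor digest, clients fall back to "latest".
    if not tag and not digest:
        tag = "latest"
    return ImageRef(registry, repository, tag or None, digest or None)


if __name__ == "__main__":
    for example in ("nginx:1.25", "localhost:5000/team/app", "nginx"):
        print(example, "->", parse_image_ref(example))
```

A tag can be moved to point at a different image later, whereas a digest identifies exact content, so pinning by digest is the stricter way to reproduce an environment.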

Common Use Cases

- Development and Testing: Developers use Docker to create consistent development environments that match production settings. This eliminates the “it works on my machine” problem, as the containerized application will run the same way in different environments.

- Microservices Architecture: Docker is well suited to deploying microservices, where each service runs in its own container. This separation of services enhances modularity and allows for independent scaling and management.

- Continuous Integration and Continuous Deployment (CI/CD): Docker streamlines the CI/CD process by ensuring that applications run consistently across different stages of the pipeline. Automated testing, integration, and deployment can be efficiently handled using Docker containers.

- Cloud Migration: Docker facilitates the migration of applications to the cloud by ensuring that they run consistently across different cloud providers. This reduces the complexity of adapting applications to different cloud environments.

- Hybrid and Multi-Cloud Deployments: Organizations can deploy Docker containers across multiple cloud providers and on-premises environments, ensuring flexibility and avoiding vendor lock-in. Docker’s portability makes it easy to manage and orchestrate applications across diverse infrastructure.

- Legacy Application Modernization: Docker helps in containerizing legacy applications, making it easier to manage and deploy them in modern infrastructure. This approach extends the life of legacy applications and integrates them into contemporary CI/CD workflows.

Architecture Differences

Docker’s Container-Based Architecture

Docker uses a container-based architecture to deliver software in isolated environments. Here’s a breakdown of its key components:

- Containers: Containers are lightweight, portable, and isolated environments that package an application and its dependencies together. They share the host system’s kernel but run in isolated user spaces, providing a consistent environment across various stages of development and deployment.

- Docker Engine: The Docker Engine is the runtime that enables containers to run on a host operating system. It includes:

  - Docker Daemon: The core service responsible for managing containers, images, networks, and storage.

  - Docker Client: The command-line interface (CLI) tool that communicates with the Docker daemon to execute commands like building, running, and managing containers.

  - REST API: The API used by the Docker client and other applications to interact with the Docker daemon programmatically.

- Docker Images: Images are read-only templates used to create containers. They include the application code, runtime, libraries, and dependencies. Docker images can be shared and stored in Docker registries.

- Docker Registries: Registries are repositories where Docker images are stored and distributed. Docker Hub is the default public registry, but private registries can also be used for specific organizational needs.

- Namespaces and Control Groups (cgroups): These are Linux kernel features used by Docker to provide isolation. Namespaces provide isolation for system resources (e.g., processes, network), while cgroups limit and monitor resource usage (e.g., CPU, memory).
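
The namespaces mentioned in the last item are visible from user space on Linux. As a rough sketch, the function below reads the namespace identifiers of a process from `/proc/<pid>/ns`; two processes that show the same identifier for a given type share that namespace. The function name `namespace_ids` is an assumption made for this example, and on non-Linux systems it simply returns an empty dictionary.

```python
import os
from pathlib import Path


def namespace_ids(pid="self"):
    """Map namespace type (e.g. 'pid', 'net', 'mnt') to its identifier.

    Reads the symlinks under /proc/<pid>/ns, which exist only on Linux.
    Returns {} if the directory is missing or unreadable.
    """
    ns_dir = Path("/proc") / str(pid) / "ns"
    ids = {}
    try:
        entries = sorted(ns_dir.iterdir())
    except OSError:
        return ids
    for entry in entries:
        try:
            # Link targets look like "net:[4026531840]".
            ids[entry.name] = os.readlink(entry)
        except OSError:
            continue  # skip entries this process may not inspect
    return ids


if __name__ == "__main__":
    for kind, ident in namespace_ids().items():
        print(f"{kind:20s} {ident}")
```

Running this once on the host and once inside a container would normally print different identifiers for types such as `pid`, `net`, and `mnt`, which is the isolation the list describes.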

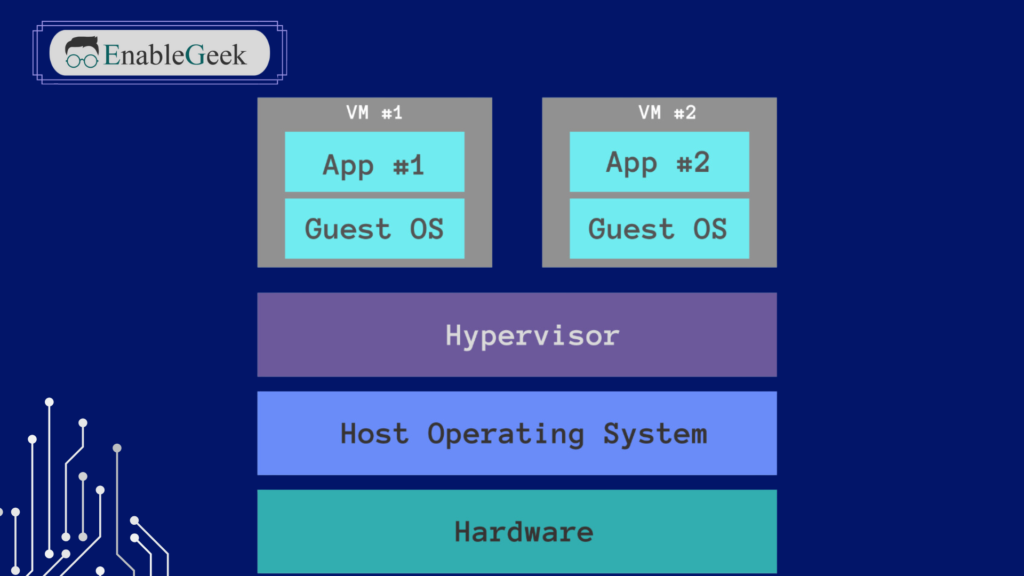

Virtual Machine’s Hypervisor-Based Architecture

Virtual Machines (VMs) rely on a hypervisor-based architecture to create fully isolated environments. Here’s a breakdown of its key components:

- Hypervisor: The hypervisor, also known as a Virtual Machine Monitor (VMM), is a software layer that allows multiple VMs to run on a single physical machine. There are two types of hypervisors:

  - Type 1 (Bare-Metal): Runs directly on the host’s hardware (e.g., VMware ESXi, Microsoft Hyper-V, Xen).

  - Type 2 (Hosted): Runs on top of a host operating system (e.g., VMware Workstation, Oracle VirtualBox).

- Virtual Machines: Each VM includes its own operating system (guest OS), applications, libraries, and dependencies. VMs are fully isolated from each other and the host system.

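- Virtual Hardware: The hypervisor presents virtual hardware (CPU, memory, storage, and network interfaces) to each VM. On modern systems the CPU is usually virtualized with hardware assistance (such as Intel VT-x or AMD-V) rather than fully emulated, while devices are either emulated or paravirtualized. This allows a VM to run any operating system that supports the virtual hardware.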

- Guest OS: Each VM has its own guest operating system, which can be different from the host OS. This allows for running multiple OS types on a single physical machine.

- Resource Allocation: The hypervisor manages the allocation of physical resources (e.g., CPU, memory) to each VM, ensuring isolation and controlled resource distribution.
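
The resource allocation item can be illustrated with a small capacity check. This is only a sketch of the bookkeeping involved, not any hypervisor's API: `VMSpec`, `placement_report`, and the default vCPU-to-core limit of 4.0 are hypothetical, and the check assumes memory is not overcommitted even though some hypervisors allow that.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VMSpec:
    name: str
    vcpus: int
    memory_gib: float


def placement_report(host_cores, host_memory_gib, vms, max_vcpu_ratio=4.0):
    """Check whether a set of VMs fits on one host.

    max_vcpu_ratio is an assumed policy limit for vCPUs per physical core.
    """
    total_vcpus = sum(vm.vcpus for vm in vms)
    total_memory = sum(vm.memory_gib for vm in vms)
    ratio = total_vcpus / host_cores
    return {
        "vcpu_ratio": ratio,
        "cpu_fits": ratio <= max_vcpu_ratio,
        "memory_fits": total_memory <= host_memory_gib,
    }


if __name__ == "__main__":
    fleet = [VMSpec("web", 4, 8), VMSpec("db", 8, 32)]  # illustrative sizes
    print(placement_report(host_cores=8, host_memory_gib=64, vms=fleet))
```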

Comparison of Resource Utilization

- Overhead:

- Docker: Containers share the host OS kernel, which leads to minimal overhead. This makes containers lightweight and efficient in terms of resource usage. Docker containers typically start up in seconds.

- VMs: Each VM requires a full operating system, which introduces significant overhead. This includes the guest OS and the virtual hardware emulation by the hypervisor. VMs generally take longer to start due to the need to boot the guest OS.

- Resource Isolation:

- Docker: Uses namespaces and cgroups for resource isolation. While effective, this approach shares the kernel, which can potentially expose the host OS to security vulnerabilities if not properly managed.

- VMs: Provide strong isolation as each VM runs a separate OS with its own kernel. The boundary is enforced by the hypervisor at the virtual hardware level, which generally makes VMs less prone to cross-VM vulnerabilities, although hypervisor bugs that allow escape do occur.

- Scalability:

- Docker: Highly scalable due to its lightweight nature. Multiple containers can be run on a single host with minimal resource consumption. Docker’s quick startup times also facilitate rapid scaling.

- VMs: Less scalable compared to Docker. Each VM consumes more resources, and the overhead of running multiple full OS instances limits the number of VMs that can efficiently run on a single host.

- Performance:

- Docker: Typically offers better performance due to lower overhead and direct access to the host OS resources. Containers can achieve near-native performance for many workloads.

- VMs: Performance is affected by hypervisor overhead and virtualized devices. With hardware-assisted virtualization and paravirtualized drivers the CPU penalty is often small, but I/O-intensive operations can still run slower than in containers.
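
The scalability point above comes down to simple arithmetic: the more memory each instance spends on something other than the application, the fewer instances fit on a host. The sketch below shows that calculation. The function `max_instances` and every number in the usage example are hypothetical and chosen only to show the shape of the comparison; real overheads vary widely by guest OS and workload.

```python
def max_instances(host_memory_mib, app_memory_mib,
                  per_instance_overhead_mib, reserved_mib=0):
    """Estimate how many instances fit in host memory.

    per_instance_overhead_mib stands for whatever each instance needs
    beyond the application itself (a guest OS for a VM, very little for
    a container). All inputs are in MiB.
    """
    usable = host_memory_mib - reserved_mib
    per_instance = app_memory_mib + per_instance_overhead_mib
    if usable <= 0 or per_instance <= 0:
        return 0
    return usable // per_instance


if __name__ == "__main__":
    # Assumed figures: 64 GiB host, 2 GiB reserved, 256 MiB application.
    host, reserved, app = 64 * 1024, 2 * 1024, 256
    print("containers:", max_instances(host, app, 10, reserved))
    print("VMs:       ", max_instances(host, app, 512, reserved))
```

Memory is only one limit; CPU, disk, and network capacity would need the same kind of estimate before sizing a real host.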

Performance Comparison

Startup Time and Resource Efficiency

Docker:

- Startup Time:

- Fast Initialization: Docker containers typically start in a matter of seconds because they share the host OS kernel and do not require a separate operating system to boot.

- Efficiency: The lightweight nature of containers contributes to their rapid startup, making them ideal for environments where quick scaling and dynamic deployment are crucial.

- Resource Efficiency:

- Shared Kernel: Containers share the host OS kernel, which reduces the need for duplicating system resources. This leads to more efficient use of CPU, memory, and storage.

- Lower Resource Consumption: Since containers do not require a full OS instance, they consume significantly fewer resources compared to virtual machines. This allows for running many more containers on a single host compared to VMs.

Virtual Machines (VMs):

- Startup Time:

- Longer Initialization: VMs take longer to start because each VM must boot a full operating system. This process can take from several seconds to a few minutes, depending on the OS and hardware.

- Complex Boot Sequence: The startup involves initializing virtual hardware components and loading the guest OS, contributing to the delay.

- Resource Efficiency:

- Dedicated OS Instances: Each VM runs a complete OS instance, which duplicates system resources and leads to higher consumption of CPU, memory, and storage.

- Heavier Resource Footprint: The need for virtual hardware emulation and a separate OS for each VM increases the overall resource footprint, limiting the number of VMs that can be efficiently run on a single host.
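
Startup-time claims like the ones above are easy to check on your own hardware. The helper below is a minimal sketch that times any command and reports the median of a few runs; `time_command` is a name invented for this example. It is demonstrated with a trivial Python process so that it runs anywhere, and the commented line shows the kind of `docker run` invocation one might substitute, assuming Docker and the `alpine` image are available locally.

```python
import statistics
import subprocess
import sys
import time


def time_command(argv, runs=3):
    """Return the median wall-clock time in seconds to run argv to completion."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(argv, check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


if __name__ == "__main__":
    baseline = time_command([sys.executable, "-c", "pass"])
    print(f"plain process start: {baseline:.3f}s")
    # Possible container measurement (requires Docker; not run here):
    # time_command(["docker", "run", "--rm", "alpine", "true"])
```

The first container run may include an image pull, so it is worth discarding that sample or pulling the image beforehand.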

Overhead and Performance Impact

Docker:

- Overhead:

- Minimal Overhead: Docker containers have minimal overhead because they leverage the host OS kernel and do not require virtualized hardware. This lightweight architecture results in efficient performance.

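- Low Latency: Containers reach the host’s resources without a hypervisor in the path, so network and I/O latency is generally low. Docker’s default bridge network (which uses NAT) and its copy-on-write storage driver add some overhead; host networking and volumes reduce it for latency-sensitive workloads.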

- Performance Impact:

- Near-Native Performance: Containers can achieve near-native performance for many workloads, particularly those that are compute or memory-intensive. The absence of a hypervisor layer reduces the performance impact.

- Efficient Resource Allocation: Docker’s use of namespaces and cgroups allows for efficient resource allocation and management, ensuring optimal performance across containers.

Virtual Machines (VMs):

- Overhead:

- Significant Overhead: VMs incur significant overhead due to the need for a hypervisor to manage and emulate virtual hardware. Each VM also runs a complete OS instance, adding to the resource usage.

- Resource Duplication: The duplication of system resources for each VM, including the guest OS and virtualized hardware, results in higher overhead compared to Docker containers.

- Performance Impact:

- Hypervisor Latency: The presence of a hypervisor introduces additional latency in network and I/O operations. This can impact the performance of applications running inside VMs.

- Resource Contention: While VMs provide strong isolation, the performance of an individual VM can still be affected by the resource demands of other VMs on the same host. The work the hypervisor does to schedule and allocate resources among multiple VMs can also affect overall system performance.

Isolation and Security

Isolation Mechanisms in Docker

1. Namespaces:

- Process Isolation: Docker uses Linux namespaces to provide isolation for containers. Namespaces ensure that a container’s processes, network interfaces, and file systems are separated from those of other containers and the host system.

- Types of Namespaces:

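- PID Namespace: Isolates the process ID number space, so each container has its own set of PIDs starting at 1. Processes in different containers can have the same PID without conflict, and a container cannot see processes outside its namespace.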

- NET Namespace: Isolates network interfaces, IP addresses, and routing tables, providing network isolation for containers.

- IPC Namespace: Isolates inter-process communication resources, such as shared memory and semaphores.

- MNT Namespace: Isolates the filesystem mount points, ensuring that containers have their own root filesystem.

- UTS Namespace: Isolates the hostname and domain name, allowing containers to have unique hostnames.

2. Control Groups (cgroups):

- Resource Limitation: cgroups are used to limit and monitor the resource usage (CPU, memory, I/O) of containers. This prevents a single container from consuming excessive resources and affecting the performance of other containers or the host system.

3. Union File System (UnionFS):

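- Layered Filesystem: Docker builds images from stacked read-only layers using a union filesystem (for example, OverlayFS through the overlay2 storage driver). Layers are shared between images, which keeps images small and builds fast. When a container runs, its changes go to a thin writable layer on top (copy-on-write), leaving the underlying image layers unchanged.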

4. Security Modules:

- AppArmor, SELinux, and seccomp: Docker integrates with the Linux security modules AppArmor and SELinux to enforce mandatory access control policies, and with seccomp, a kernel system-call filter, to restrict which system calls a container can make.
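
The layered filesystem in item 3 can be modeled with Python's `collections.ChainMap`, which looks keys up through a stack of dictionaries and writes only to the first one. This is an analogy under simplifying assumptions, not how Docker stores files: the layer contents and the `start_container` helper are invented for the example, and file deletion (whiteouts) is not modeled.

```python
from collections import ChainMap

# Read-only image layers, bottom to top (contents are made up).
base = {"/bin/sh": "busybox", "/etc/os-release": "alpine"}
deps = {"/usr/lib/libssl.so": "openssl"}
app = {"/app/main.py": "v1"}


def start_container(*image_layers):
    """Return a merged view: a fresh writable layer over shared image layers.

    image_layers are given bottom to top; lookups search top to bottom.
    """
    writable = {}
    return ChainMap(writable, *reversed(image_layers))


c1 = start_container(base, deps, app)
c2 = start_container(base, deps, app)

# A write in one container lands in that container's writable layer only.
c1["/etc/os-release"] = "patched"

print(c1["/etc/os-release"], c2["/etc/os-release"], base["/etc/os-release"])
```

Both containers reuse the same three image layers, which is why starting a second container from an image costs little extra storage.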

Isolation Mechanisms in Virtual Machines

1. Hypervisor:

- Complete Isolation: The hypervisor (Virtual Machine Monitor) provides strong isolation between VMs by virtualizing hardware resources. Each VM operates as if it has its own dedicated hardware, completely separated from other VMs and the host system.

- Types of Hypervisors:

- Type 1 (Bare-Metal): Runs directly on the host’s hardware (e.g., VMware ESXi, Microsoft Hyper-V, Xen).

- Type 2 (Hosted): Runs on top of a host operating system (e.g., VMware Workstation, Oracle VirtualBox).

2. Guest OS:

- Independent Operating Systems: Each VM runs its own guest operating system, providing an additional layer of isolation. The OS kernel, drivers, and system resources are isolated from those of other VMs and the host.

3. Virtual Hardware:

- Emulated Hardware: The hypervisor emulates virtual hardware components (CPU, memory, network interfaces, storage), ensuring that each VM has isolated and dedicated resources.

Security Considerations for Both

Docker:

- Shared Kernel:

- Shared Kernel Risks: Since containers share the host OS kernel, a vulnerability in the kernel can potentially be exploited to affect other containers or the host system.

- Security Best Practices:

- Least Privilege: Run containers with the least privileges necessary to reduce the risk of exploitation.

- Image Security: Use trusted, official Docker images and regularly scan images for vulnerabilities.

- Isolation Enhancements: Apply AppArmor or SELinux profiles and seccomp filters, and drop Linux capabilities the application does not need, to tighten container isolation.

- Network Security:

- Isolated Networks: Use Docker networks to isolate container communications. Employ network policies and firewalls to control traffic between containers and the outside world.

- Updates and Patches:

- Regular Updates: Keep the Docker Engine and container images updated with the latest security patches to mitigate vulnerabilities.
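
Several of these practices map directly onto `docker run` options. The sketch below only builds the argument list and prints it; it does not start anything. The function `hardened_run_args`, its default values, and the image name `example/app:1.0` are hypothetical, while the flags themselves (`--user`, `--read-only`, `--cap-drop`, `--security-opt no-new-privileges`, `--memory`, `--cpus`, `--pids-limit`, `--network`) are standard Docker CLI options.

```python
import shlex


def hardened_run_args(image, user="1000:1000", memory="256m",
                      cpus="0.5", pids_limit=100, network=None):
    """Build a `docker run` argument list with restrictive defaults."""
    args = [
        "docker", "run", "--rm",
        "--user", user,                           # do not run as root
        "--read-only",                            # read-only root filesystem
        "--cap-drop", "ALL",                      # drop all Linux capabilities
        "--security-opt", "no-new-privileges",    # block privilege escalation
        "--memory", memory,                       # cgroup memory limit
        "--cpus", cpus,                           # cgroup CPU limit
        "--pids-limit", str(pids_limit),          # cap the number of processes
    ]
    if network:
        args += ["--network", network]            # attach to a specific network
    args.append(image)
    return args


if __name__ == "__main__":
    print(shlex.join(hardened_run_args("example/app:1.0", network="backend")))
```

Many images need adjustments before they run under these settings, for example a writable temporary directory or a specific capability, so treat the defaults as a starting point rather than a drop-in policy.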

Virtual Machines:

- Strong Isolation:

- Hardware-Level Isolation: Each VM runs its own kernel, so a kernel exploit inside one guest does not directly expose other VMs or the host. An attacker would additionally need to break out of the hypervisor.

- Security Best Practices:

- Hardened Hypervisor: Ensure the hypervisor is secure and up-to-date with patches to prevent attacks that target the hypervisor itself.

- Segmentation: Use network segmentation and firewalls to control VM-to-VM and VM-to-host communications.

- Guest OS Security:

- Patch Management: Regularly update and patch the guest OS and applications within each VM to mitigate vulnerabilities.

- Antivirus and Monitoring: Employ antivirus software and monitoring tools within VMs to detect and respond to threats.

- Resource Allocation:

- Resource Limits: Configure resource limits and reservations to prevent one VM from monopolizing hardware resources, ensuring fair distribution and performance.

Scalability and Portability

How Docker Handles Scalability and Portability

Scalability:

- Horizontal Scaling:

- Efficient Resource Usage: Docker containers are lightweight and have minimal overhead, allowing for efficient horizontal scaling. Multiple containers can be deployed across various nodes to distribute the load and handle increased traffic.

- Docker Swarm: Docker Swarm is Docker’s native clustering and orchestration tool. It manages a cluster of Docker nodes as a single system, so applications can be scaled by changing the number of replicas of a service and by adding or removing nodes.

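- Kubernetes: Kubernetes, a popular container orchestration platform, schedules and scales containers built from Docker (OCI) images across a cluster of machines. Since version 1.24 it runs them by default through container runtimes such as containerd or CRI-O rather than through Docker Engine. Kubernetes automates the deployment, scaling, and management of containerized applications.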

- Load Balancing:

- Service Discovery: Docker Swarm and Kubernetes provide service discovery and built-in load balancing, ensuring that requests are evenly distributed across containers.

- Auto-Scaling: Kubernetes supports auto-scaling based on metrics like CPU and memory usage, allowing the system to automatically adjust the number of running containers based on demand.
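
The auto-scaling behavior can be sketched with the core formula that the Kubernetes documentation gives for the Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function below is an illustration only; its name, parameters, and default bounds are assumptions, and it leaves out the real controller's tolerance band, stabilization windows, and handling of pods that are not ready.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core Horizontal Pod Autoscaler calculation, clamped to [min, max].

    current_metric and target_metric use the same unit, for example
    average CPU utilization in percent.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```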

Portability:

- Consistent Environment:

- Write Once, Run Anywhere: Docker containers package an application and its dependencies, ensuring that it runs consistently across different environments (development, testing, production). This eliminates issues related to environment differences.

- Docker Images: Docker images can be easily shared and transported via Docker registries, making it simple to move applications between different environments or cloud providers.

- Cross-Platform Compatibility:

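- Multi-Platform Support: Docker runs on Windows, macOS, and various Linux distributions, and on several CPU architectures. On Windows and macOS, Linux containers run inside a lightweight Linux VM managed by Docker Desktop, and an image must be built for the target architecture or published as a multi-architecture image.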

- Cloud-Agnostic: Docker containers can run on any platform that supports Docker, including major cloud providers like AWS, Azure, Google Cloud, and on-premises environments.
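
Cross-platform portability depends on the image offering a build for the host's platform. As a rough sketch, the code below maps the machine name reported by Python to the architecture names used in image platform strings (such as `linux/amd64`) and checks it against a set of platforms an image is assumed to provide. The helper names and the alias table are assumptions for this example, and architecture variants such as `linux/arm/v7` are ignored.

```python
import platform

# Common machine names mapped to the architecture names used for images.
_ARCH_ALIASES = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def docker_arch(machine=None):
    """Normalize a machine name (default: this host) to an image architecture."""
    machine = (machine or platform.machine()).lower()
    return _ARCH_ALIASES.get(machine, machine)


def can_run_natively(image_platforms, host_arch=None, host_os="linux"):
    """Return True if image_platforms includes the host's os/arch pair."""
    return f"{host_os}/{docker_arch(host_arch)}" in image_platforms


if __name__ == "__main__":
    published = {"linux/amd64", "linux/arm64"}  # assumed platform list
    print(docker_arch(), can_run_natively(published))
```

When no native build exists, an image can sometimes still run under CPU emulation, but usually at a noticeable performance cost.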

How Virtual Machines Handle Scalability and Portability

Scalability:

- Vertical Scaling:

- Resource Allocation: Virtual machines can be vertically scaled by allocating more CPU, memory, and storage resources to an existing VM. This approach is often limited by the physical hardware capacity.

- Dynamic Resource Adjustment: Some hypervisors support dynamic resource allocation, allowing VMs to adjust resource usage on the fly based on demand.

- Horizontal Scaling:

- Cloning and Templates: New VMs can be quickly deployed using templates or cloning existing VMs. This facilitates horizontal scaling by adding more VM instances to handle increased load.

- Orchestration Tools: Tools like VMware vSphere, Microsoft System Center, and OpenStack provide orchestration capabilities to manage and scale VM environments efficiently.

Portability:

- VM Templates and Snapshots:

- Consistent Deployment: VM templates and snapshots allow for consistent deployment and replication of VMs across different environments. These can be used to quickly spin up new instances with pre-configured settings and software.

- Hypervisor Compatibility:

- Live Migration: Many hypervisors support live migration, allowing VMs to be moved between physical hosts with minimal downtime. This enhances portability within the same virtual infrastructure.

- Cross-Hypervisor Migration: Tools and services are available to facilitate the migration of VMs between different hypervisors and cloud providers, although this process can be complex and requires careful planning.

- Cloud Integration:

- Hybrid Cloud: VMs can be integrated into hybrid cloud environments, combining on-premises and cloud resources. Cloud providers offer services to import and run VMs, providing flexibility in deployment.

Real-World Examples

Docker:

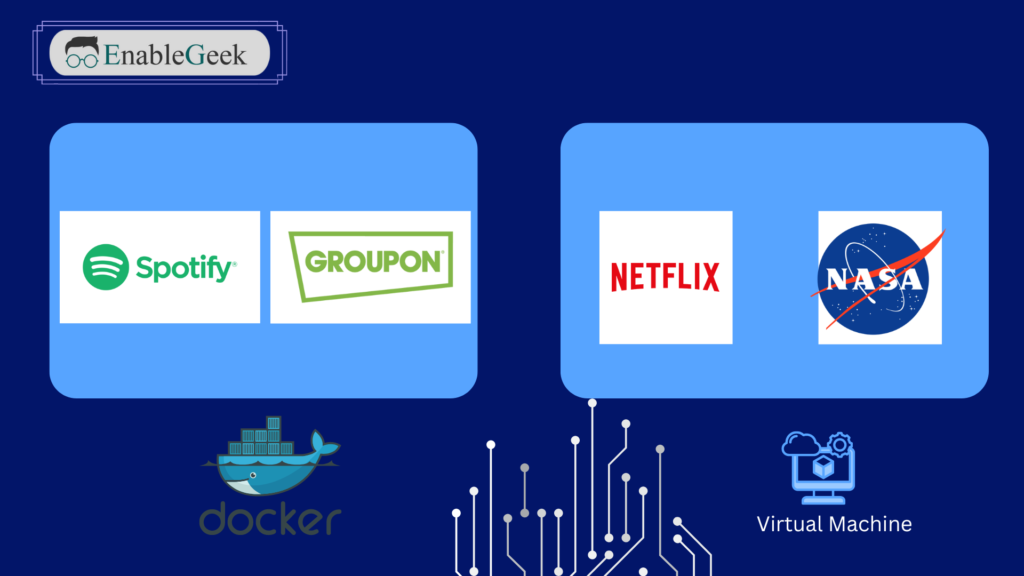

- Spotify:

- Microservices Architecture: Spotify uses Docker to deploy its microservices architecture. Containers enable quick scaling and consistent deployment across various environments, allowing Spotify to handle high traffic volumes and rapidly roll out new features.

- Groupon:

- Global Infrastructure: Groupon uses Docker to manage its global infrastructure, running thousands of containers in production. Docker’s portability and scalability allow Groupon to efficiently manage resources and deploy applications across multiple data centers.

Virtual Machines (VMs):

- Netflix:

- High Availability: Netflix uses a combination of VMs and containers to ensure high availability and scalability. VMs provide robust, isolated environments for critical services, while containers enable rapid deployment and scaling of microservices.

- NASA:

- Scientific Computing: NASA utilizes virtual machines for scientific computing and data analysis. VMs provide the necessary isolation and resource allocation to handle intensive computational tasks and large datasets, with the ability to scale vertically and horizontally as needed.