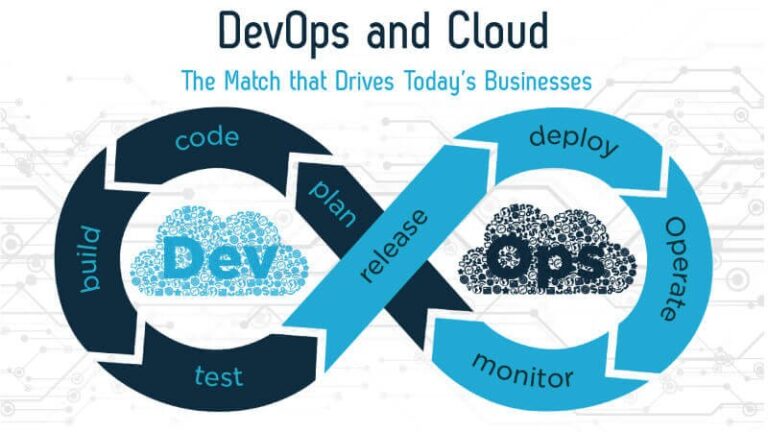

Cloud computing is built on virtualized IT infrastructure: servers, operating systems, networking, and other resources that specialized software abstracts so they can be pooled and divided without regard to physical hardware boundaries. For instance, one physical server can be split into several virtual servers.

Virtualization lets cloud service providers use their data center resources to their full potential. Unsurprisingly, many businesses have also adopted the cloud delivery model for their on-premises infrastructure to get the most out of it, reduce costs compared with traditional IT infrastructure, and offer their customers the same level of self-service and agility.

How Does Serverless Computing Work?

Serverless computing is a cloud-based execution model in which the cloud service provider runs the servers and allocates machine resources on demand, so users and developers never have to manage them. Despite the name, servers still exist; they are simply out of the developer's view. The model combines services, practices, and techniques that help developers build cloud-based applications while concentrating on their code rather than on server administration.

The cloud service provider, such as AWS or Google Cloud Platform, takes full responsibility for routine infrastructure operations: resource allocation, capacity planning, configuration, and scaling, as well as patching, updates, scheduling, and maintenance. Developers can therefore spend their time and energy on the business logic of their processes and applications.
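
A minimal sketch of what remains for the developer: a single handler that receives an event and returns a response. The `(event, context)` signature follows the convention used by AWS Lambda's Python runtime; the `name` field in the event is a hypothetical example, and everything outside the function (provisioning, scaling, invocation) is assumed to be handled by the provider.

```python
import json
from typing import Any


def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Business logic only: no server setup, ports, or process management.

    `event` carries the request payload; its `name` field is a hypothetical
    example. `context` is supplied by the platform and unused here.
    """
    name = event.get("name", "world")
    body = {"message": f"Hello, {name}!"}
    # Return an HTTP-style response that the platform or an API gateway can relay.
    return {"statusCode": 200, "body": json.dumps(body)}


if __name__ == "__main__":
    # Local test: call the handler directly with a sample event.
    print(handler({"name": "serverless"}, None))
```

Because the handler is an ordinary function, it can be tested locally by calling it directly, as in the `__main__` block.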

Rather than keeping compute resources permanently allocated and waiting in memory, a serverless design runs code in short bursts only when it is invoked. If an application is not being used, no resources are allocated to it, so you pay only for the resources your application actually consumes.
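
The pay-per-use model comes down to simple arithmetic. The sketch below assumes a billing structure similar to what several providers use, charging for GB-seconds (memory × execution time) plus a per-request fee, and compares it with a server billed for every hour of the month. All rates are placeholders, not any provider's actual prices.

```python
def serverless_monthly_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_gb_second: float = 0.0000167,  # placeholder rate, not a real price list
    price_per_million_requests: float = 0.20,  # placeholder rate, not a real price list
) -> float:
    """Pay-per-use: charges accrue only for invocations and the time code actually runs."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = gb_seconds * price_per_gb_second
    request_cost = invocations / 1_000_000 * price_per_million_requests
    return compute_cost + request_cost


def always_on_monthly_cost(hourly_rate: float, hours: float = 730) -> float:
    """A reserved server is billed for every hour (~730 per month), busy or idle."""
    return hourly_rate * hours


if __name__ == "__main__":
    # One million 200 ms invocations at 128 MB, an idle month, and an always-on server.
    print(f"busy month: ${serverless_monthly_cost(1_000_000, 200, 128):.4f}")
    print(f"idle month: ${serverless_monthly_cost(0, 200, 128):.4f}")
    print(f"always-on server: ${always_on_monthly_cost(0.05):.2f}")
```

With zero invocations the serverless cost is zero, whereas the always-on server is charged regardless of load; which option is cheaper depends on how busy the workload really is.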

Serverless architecture was developed primarily to make deploying code to production easier. It is often used alongside architectures such as microservices. Applications built on serverless technology respond to user requests as they arrive and scale up or down automatically as needed.

Serverless computing uses an event-driven model: the platform scales according to the events and requests it actually receives. Developers therefore no longer have to predict how an application will be used when deciding how many servers or how much bandwidth it needs. Capacity is added as demand grows, without reserving it in advance, and released again when demand falls.
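
A simplified model of event-driven scaling: estimate how many requests are in flight (arrival rate × average duration, following Little's law) and run just enough instances to serve them, dropping to zero when traffic stops. This is an illustrative assumption, not the autoscaling algorithm of any particular provider; the parameter names and the `max_instances` cap are hypothetical.

```python
import math


def instances_needed(
    requests_per_second: float,
    avg_duration_s: float,
    concurrency_per_instance: int = 1,
    max_instances: int = 1000,
) -> int:
    """Estimate how many function instances an event-driven platform would run.

    In-flight requests ~= arrival rate x duration (Little's law); each instance
    serves `concurrency_per_instance` of them at once. No traffic scales to zero.
    `max_instances` stands in for a provider's concurrency limit (hypothetical value).
    """
    if requests_per_second <= 0:
        return 0
    in_flight = requests_per_second * avg_duration_s
    return min(max_instances, math.ceil(in_flight / concurrency_per_instance))


if __name__ == "__main__":
    # Traffic over a day: idle, morning ramp, peak, then idle again.
    for rps in [0, 5, 50, 400, 0]:
        n = instances_needed(rps, avg_duration_s=0.5, concurrency_per_instance=10)
        print(f"{rps:>4} req/s -> {n} instance(s)")
```

No capacity is reserved up front: the instance count follows the load in both directions, including back to zero.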

Attributes of Serverless Computing

The following are some characteristics of serverless computing:

- Single functions and short pieces of code make up the majority of serverless applications.

- Code runs only when needed, typically in a stateless container, and scales smoothly with demand.

- Customers are not required to manage the servers.

- Execution is event-based: the compute environment runs the code as soon as a function is called or an event is received.

- Scaling is flexible in both directions. Once the code finishes running, the resources serving it are released, which saves money; while demand stays high, the platform keeps adding instances, up to the provider's concurrency limits.

- Complex concerns such as file storage, queuing, and databases can be delegated to managed cloud services.
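
Because functions typically run in stateless containers that the platform can create and discard at will, state kept inside an instance is unreliable. The simulation below (all class names are hypothetical) routes five requests from one user across two instances: the in-memory counter disagrees between instances, while the counter held in an external store, standing in for a managed database, stays correct.

```python
from itertools import cycle


class ExternalStore:
    """Stand-in for a managed database or cache that outlives any instance (hypothetical)."""

    def __init__(self) -> None:
        self.data: dict[str, int] = {}

    def increment(self, key: str) -> int:
        self.data[key] = self.data.get(key, 0) + 1
        return self.data[key]


class FunctionInstance:
    """One container running the function; the platform may create or discard it at any time."""

    def __init__(self, store: ExternalStore) -> None:
        self.local_count = 0  # lives only as long as this instance does
        self.store = store

    def handle(self, event: dict) -> dict:
        self.local_count += 1
        return {"local": self.local_count, "shared": self.store.increment(event["user"])}


store = ExternalStore()
# The platform spreads five requests from the same user across two instances.
instances = cycle([FunctionInstance(store), FunctionInstance(store)])
results = [next(instances).handle({"user": "alice"}) for _ in range(5)]

if __name__ == "__main__":
    for r in results:
        print(r)
```

The per-instance count depends on which container happened to serve each request; only the externally stored count reflects all five.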

Serverless Computing Features

- Reduced latency: Because the application is not tied to a single origin server, its code can run from many locations. If your cloud provider supports it, functions can run on servers close to end users, so requests travel a shorter distance and latency drops.

- Efficiency in development: In a serverless setting, developers can concentrate on their primary responsibilities instead of working out the underlying technology needed to run and support their programs. As a result, they can often build applications faster than in conventional environments.

- Dynamic scaling: A serverless environment makes dynamic scaling easier. Many serverless offerings, such as Red Hat OpenShift Serverless, are built on Knative, an open-source framework that provides container-based serverless capabilities on top of Kubernetes. This lets workloads scale according to actual user demand, including down to zero and back up again.

- Simpler resource management: Server-related tasks, including provisioning, scaling, and managing the infrastructure needed to run the application, are taken off developers' hands and performed automatically in the background. Easier resource allocation is therefore one of the major benefits of a serverless architecture.

- Daisy chaining: Functions can be configured to call one another or to hand work off to other cloud services and infrastructure. By combining Function as a Service (FaaS), Platform as a Service (PaaS), and Infrastructure as a Service (IaaS), complex processing pipelines can be built more quickly and easily.
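
Daisy chaining can be sketched as function composition: each function's output becomes the next function's input event. On a real platform each hop would typically be an asynchronous invocation, a queue message, or a workflow step; here plain Python calls stand in for those hops, and the `validate`, `add_tax`, and `store` stages (including the 20% tax rate and the in-memory `STORAGE` list) are hypothetical.

```python
from typing import Any, Callable

Event = dict[str, Any]
Stage = Callable[[Event], Event]

# In-memory list standing in for a managed storage service (hypothetical).
STORAGE: list[Event] = []


def validate(event: Event) -> Event:
    """Stage 1: reject malformed input before any further work is done."""
    if "order_id" not in event or event.get("amount", 0) <= 0:
        raise ValueError(f"invalid order event: {event!r}")
    return event


def add_tax(event: Event) -> Event:
    """Stage 2: enrich the event; the 20% rate is an illustrative assumption."""
    return {**event, "total": round(event["amount"] * 1.2, 2)}


def store(event: Event) -> Event:
    """Stage 3: offload persistence to the (simulated) storage service."""
    STORAGE.append(event)
    return {**event, "stored": True}


def chain(*stages: Stage) -> Stage:
    """Daisy-chain stages so each one's output becomes the next one's input event."""
    def run(event: Event) -> Event:
        for stage in stages:
            event = stage(event)
        return event
    return run


pipeline = chain(validate, add_tax, store)

if __name__ == "__main__":
    print(pipeline({"order_id": "A-1", "amount": 50.0}))
```

Keeping each stage small and independent means any one of them can be replaced, for example by a call to a managed service, without touching the others.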

Serverless Computing Use Cases

Thanks to these distinctive qualities, serverless architecture suits a variety of use cases, including:

- API back-ends: With serverless platforms, any function can quickly be turned into a client-ready HTTP endpoint. On platforms such as Apache OpenWhisk, functions exposed to the web this way are called web actions. Once they are enabled, composing the functions into a complete API is straightforward. For added security, custom domain support, rate limiting, and OAuth support, you can place an API gateway in front of them.

- Batch/stream processing: By combining FaaS with a database and Apache Kafka as the streaming platform, you can build robust streaming applications and data pipelines. The serverless paradigm suits many kinds of stream ingestion, including IoT sensor data, business data streams, financial market data, and application logs.

- Applications for mobile and IoT: Mobile and Internet of Things (IoT) applications that need resources allocated dynamically according to demand are excellent candidates for serverless computing. Developers can build scalable, responsive applications from functions triggered by events in mobile apps or by interactions with IoT devices.

- ETL and data processing: Serverless platforms can run data processing and Extract, Transform, Load (ETL) jobs. Functions can be triggered by scheduled tasks or database events to perform data transformations, aggregations, or imports and exports.
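
An ETL function of the kind described above can be sketched as a single handler that extracts records from its triggering event, transforms and aggregates them, and hands the result to a load step. The event shape (`records` with `sensor` and `celsius` fields) is a hypothetical example, such as IoT sensor readings delivered in batches; in practice the trigger, whether a schedule, a database change, or a queue, defines the payload format.

```python
from collections import defaultdict
from typing import Any


def etl_handler(event: dict[str, Any], context: Any = None) -> dict[str, Any]:
    """Extract records from the triggering event, transform them, and load a summary.

    The `records` field and its `sensor`/`celsius` keys are a hypothetical event shape.
    """
    # Extract: pull raw rows out of the event payload.
    rows = event.get("records", [])

    # Transform: drop incomplete rows and convert Celsius to Fahrenheit.
    clean = [
        {"sensor": r["sensor"], "fahrenheit": r["celsius"] * 9 / 5 + 32}
        for r in rows
        if "sensor" in r and r.get("celsius") is not None
    ]

    # Aggregate: average reading per sensor.
    readings: dict[str, list[float]] = defaultdict(list)
    for row in clean:
        readings[row["sensor"]].append(row["fahrenheit"])
    averages = {sensor: sum(v) / len(v) for sensor, v in readings.items()}

    # Load: a real function would write `averages` to a database or object store here.
    return {"loaded": len(clean), "skipped": len(rows) - len(clean), "averages": averages}


if __name__ == "__main__":
    sample = {"records": [
        {"sensor": "a", "celsius": 0},
        {"sensor": "a", "celsius": 100},
        {"sensor": "b", "celsius": None},  # incomplete reading, skipped
    ]}
    print(etl_handler(sample))
```

Because each batch is processed independently, the platform can run many copies of this handler in parallel when a large import or a burst of events arrives.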

Serverless computing is a cloud-based execution model in which cloud service providers run the servers and allocate machine resources on demand, without users or developers having to manage them. It offers event-based execution, flexible and dynamic scaling, reduced latency, and more efficient development. It is well suited to API back-ends, batch and stream processing, mobile and IoT applications, and ETL and data processing, where functions can be triggered by events such as scheduled tasks or database changes.